
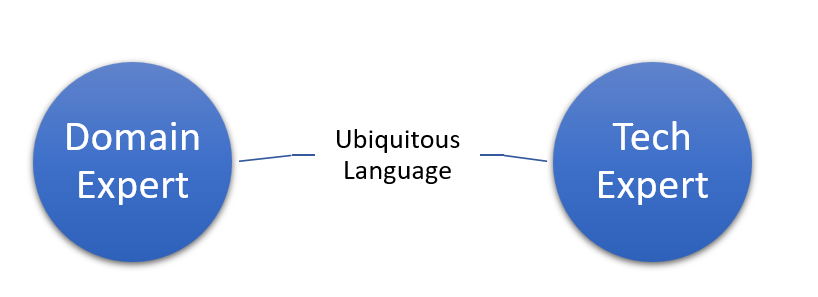

In software development, effective communication between technical and non-technical stakeholders has become paramount. Enter Domain-Driven Design (DDD), an approach that places a strong emphasis on understanding and modeling the problem domain. At the heart of DDD lies Ubiquitous Language: a shared vocabulary that acts as a bridge between developers and domain experts.

Introduction to Ubiquitous Language in DDD

Defining Ubiquitous Language in DDD

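In DDD, Ubiquitous Language is the vocabulary that developers and domain experts build together and then use everywhere: in conversations, requirements, diagrams, tests, and the code itself. The term was introduced by Eric Evans in his 2003 book Domain-Driven Design. Within a given bounded context each term has one agreed meaning, so a word such as "order" or "policy" means the same thing to the business expert who says it and to the developer who implements it.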
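To make the idea concrete, here is a minimal Python sketch of a hypothetical library-lending domain. The terms (`Member`, `Loan`, `check_out`, `return_book`, `is_overdue`) and the 14-day loan period are assumptions chosen for illustration, not taken from any real system. The point is that each name is a word the domain experts themselves would use, rather than a generic label such as `Record` or `process()`.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta
from typing import List, Optional

# Hypothetical domain: library lending. Class and method names deliberately
# mirror the words librarians use ("member", "loan", "check out", "overdue").
LOAN_PERIOD = timedelta(days=14)  # assumed lending policy, for illustration only


@dataclass
class Loan:
    book_isbn: str
    checked_out_on: date
    returned_on: Optional[date] = None

    @property
    def due_date(self) -> date:
        return self.checked_out_on + LOAN_PERIOD

    def is_overdue(self, today: date) -> bool:
        # Overdue means: not yet returned and past the due date.
        return self.returned_on is None and today > self.due_date


@dataclass
class Member:
    member_id: str
    loans: List[Loan] = field(default_factory=list)

    def check_out(self, book_isbn: str, today: date) -> Loan:
        # Checking out a book opens a new loan for this member.
        loan = Loan(book_isbn, today)
        self.loans.append(loan)
        return loan

    def return_book(self, book_isbn: str, today: date) -> None:
        # Returning a book closes the member's open loan for it.
        for loan in self.loans:
            if loan.book_isbn == book_isbn and loan.returned_on is None:
                loan.returned_on = today
                return
        raise ValueError(f"no open loan for {book_isbn}")


member = Member("M-001")
loan = member.check_out("isbn-001", date(2024, 1, 1))
print(loan.due_date)                       # 2024-01-15
print(loan.is_overdue(date(2024, 1, 20)))  # True
```

A librarian reading `member.check_out(...)` or `loan.is_overdue(today)` should recognize the business rule being expressed; that recognition is a practical test of whether the code speaks the team's Ubiquitous Language.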

Importance in Various Fields

From business to education, Ubiquitous Language matters wherever software must model a complex domain. This section explores how a shared vocabulary has changed the way teams communicate and interact.

Origins and Evolution

Historical Background

Tracing the roots of Ubiquitous Language takes us back to Eric Evans's book Domain-Driven Design: Tackling Complexity in the Heart of Software (2003), which introduced the term. Understanding how the idea has evolved since then explains how teams apply it today.

Evolution in Technology

This section explores how technology and language have evolved together. How has the digital age shaped the way we express ourselves?

Ubiquitous Language in Everyday Life

Language is woven into our daily experiences. Words and phrases become part of our surroundings and shape how we perceive and interact with the world, from casual conversations to digital dialogues.

Language Trends

This section explores contemporary language trends: slang, abbreviations, and the influence of pop culture on our linguistic landscape.

Impact on Communication

How has Ubiquitous Language influenced the way we communicate in our daily lives? From casual conversations to professional discourse, its impact is profound.

Ubiquitous Language in Technology

Integration in Smart Devices

Smartphones, smart speakers, and other devices have become fluent in Ubiquitous Language. This section examines how these technologies have adapted to our linguistic preferences.

AI and Natural Language Processing

The intersection of artificial intelligence and language is discussed here. How are machines learning to understand and respond to human language?

Ubiquitous Language in Business

Marketing Strategies

Ubiquitous Language plays a pivotal role in modern marketing. Explore how businesses leverage linguistic trends to connect with diverse audiences.

Global Communication

Breaking down language barriers is crucial for global enterprises. How does Ubiquitous Language facilitate seamless communication across borders?

Challenges and Criticisms

Privacy Concerns

As language becomes more integrated into technology, concerns about privacy emerge. This section delves into the ethical considerations surrounding Ubiquitous Language.

Standardization Issues

When different groups use different words for the same concept, keeping communication consistent becomes a challenge. How can teams bridge that gap and agree on one term per concept?
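One common way for teams to keep terms consistent is a shared glossary that records one agreed term per concept. The Python sketch below assumes a hypothetical glossary mapping discouraged synonyms to the agreed term and flags identifiers in source text that drift from it. It is an illustration rather than an existing tool, and simple word matching like this will produce false positives that a person should review.

```python
import re
from typing import Dict, List, Tuple

# Hypothetical team glossary: discouraged synonym -> agreed domain term.
GLOSSARY_SYNONYMS: Dict[str, str] = {
    "customer": "member",
    "borrow": "check_out",
    "rental": "loan",
}

IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")
# Splits camelCase, PascalCase, and snake_case identifiers into words.
WORD = re.compile(r"[A-Z]?[a-z0-9]+|[A-Z]+(?![a-z])")


def find_off_glossary_terms(source: str) -> List[Tuple[str, str]]:
    """Return (identifier, agreed term) pairs for identifiers using a discouraged synonym."""
    findings = []
    for name in sorted(set(IDENTIFIER.findall(source))):
        for word in WORD.findall(name):
            agreed = GLOSSARY_SYNONYMS.get(word.lower())
            if agreed is not None:
                findings.append((name, agreed))
                break  # one finding per identifier is enough
    return findings


snippet = "def borrowBook(customer_id):\n    rental = open_loan(customer_id)\n"
for name, agreed in find_off_glossary_terms(snippet):
    print(f"{name}: use '{agreed}' instead")
```

The glossary itself is the important artifact; a check like this only helps a team notice when code starts to diverge from the vocabulary it has already agreed on.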

Benefits of Ubiquitous Language in DDD

What are the benefits of Ubiquitous Language in DDD?

Enhanced Connectivity

Ubiquitous Language fosters a sense of connection. Explore how it enhances relationships, both personal and professional.

Improved Accessibility

Breaking down language barriers improves accessibility to information. This section highlights how Ubiquitous Language contributes to a more inclusive world.

Future Trends in Ubiquitous Language

Emerging Technologies

What technologies are on the horizon that will further integrate language into our daily lives? This section explores the future landscape of Ubiquitous Language.

Potential Developments

Predictions about how Ubiquitous Language might evolve in the coming years. What breakthroughs can we anticipate?

How to Adapt to Ubiquitous Language

Tips for Effective Communication

Navigating the landscape of Ubiquitous Language requires some skills. This section provides practical tips for effective communication in this digital age.

Cultural Considerations

Language is deeply rooted in culture, and understanding cultural nuances is essential for successful communication. How can we navigate this terrain?

Ubiquitous Language in Education

Language Learning Apps

The role of technology in language education is discussed. How are language learning apps incorporating Ubiquitous Language into their platforms?

Impact on Traditional Education

Traditional educational institutions are not immune to the influence of Ubiquitous Language. Explore how it has impacted language education in schools and universities.

Social Impacts of Ubiquitous Language

Influence on Social Media

Social media platforms are language hubs. This section explores how Ubiquitous Language shapes online conversations and trends.

Shaping Cultural Norms

Language is a powerful tool for shaping cultural norms. How does Ubiquitous Language contribute to the evolution of societal values?

Ubiquitous Language and Personalization

Customized User Experiences

The personalization of language experiences is on the rise. How are businesses tailoring their language strategies to create unique user experiences?

Challenges and Opportunities

While personalization brings opportunities, it also presents challenges. Explore the delicate balance between customization and maintaining inclusivity.

Ethical Considerations

Responsible Use of Language Data

As technology processes vast amounts of language data, ethical considerations come to the forefront. How can we ensure responsible use?

Avoiding Discrimination and Bias

Language can inadvertently perpetuate biases. This section discusses how to mitigate discriminatory language and biases in Ubiquitous Language.

Case Studies

Successful Implementations

Explore real-world examples of successful integration of Ubiquitous Language. What can we learn from these case studies?

Lessons Learned

What challenges have organizations faced in adopting Ubiquitous Language, and what lessons can be gleaned from their experiences?

Conclusion

In conclusion, Ubiquitous Language in DDD is not just a linguistic trend but a transformative practice: a shared vocabulary that keeps domain experts, developers, and the code itself aligned. From day-to-day conversations to global business, its impact is significant. As we continue to navigate this linguistic landscape, understanding its nuances is essential for effective and ethical communication.

FAQs

- Is Ubiquitous Language the same as a universal language?

- No. A universal language would be shared by everyone, whereas a Ubiquitous Language belongs to a specific domain model. In DDD it is scoped to a bounded context, so the same word (for example, "account") can carry different meanings in different contexts.

- How can businesses leverage Ubiquitous Language for marketing?

- Businesses can tailor their marketing strategies to align with current linguistic trends, ensuring their messages resonate with diverse audiences.

- What challenges do educators face in adapting to Ubiquitous Language in traditional classrooms?

- Educators may struggle to balance traditional teaching methods with the evolving linguistic landscape, requiring a thoughtful approach to language education.

- What ethical considerations are associated with the use of Ubiquitous Language in technology?

- Ethical considerations include privacy concerns, responsible use of language data, and the potential for perpetuating biases through language algorithms.

- Can Ubiquitous Language truly bridge cultural gaps in communication?

- While Ubiquitous Language facilitates communication, understanding cultural nuances remains essential for effective cross-cultural interactions.