Contents

Introduction

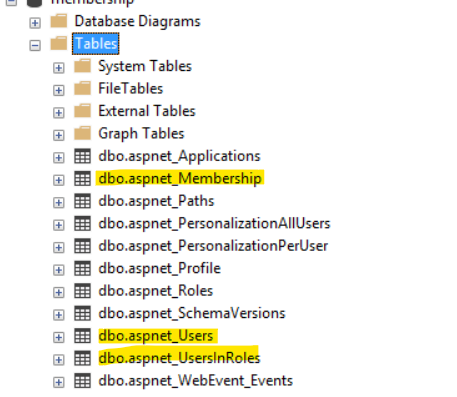

In the provided SQL script, the data entry order for the tables is as follows:

- aspnet_Membership: Records are inserted first into this table.

- aspnet_Users: Records are inserted after inserting records into the

aspnet_Membershiptable. - aspnet_UsersInRoles: Records are inserted last, after inserting records into both the

aspnet_Membershipandaspnet_Userstables.

This order ensures that any foreign key constraints between these tables are respected, as records in child tables (aspnet_Users and aspnet_UsersInRoles) reference records in the parent table (aspnet_Membership).

In a typical ASP.NET Membership schema, the aspnet_Membership, aspnet_Users, and aspnet_UsersInRoles tables share the UserId column as a common key. Here’s a brief description of the relationships:

- aspnet_Users: This table contains user information and has a primary key

UserId. - aspnet_Membership: This table contains membership-specific information for users and has a foreign key

UserIdreferencing theaspnet_Userstable. - aspnet_UsersInRoles: This table maps users to roles and has a foreign key

UserIdreferencing theaspnet_Userstable.

ASP.NET Membership Schema Overview

The ASP.NET Membership schema provides a framework for managing user authentication and authorization in an ASP.NET application. Here’s a brief overview of the key tables involved:

- aspnet_Users: Stores basic user information.

- aspnet_Membership: Stores membership-specific details, linked to the

aspnet_Userstable viaUserId. - aspnet_UsersInRoles: Maps users to roles, linked to the

aspnet_Userstable viaUserId.

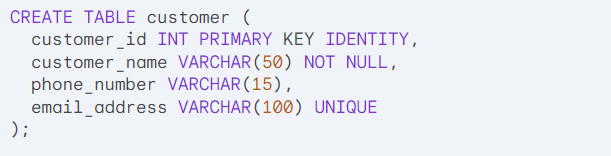

Table Details

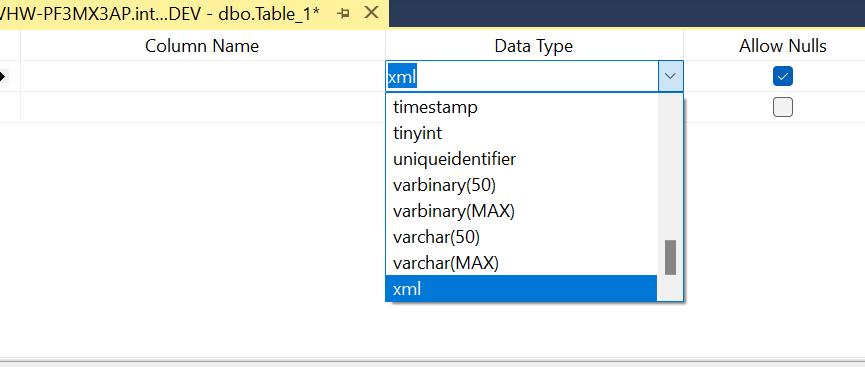

1. aspnet_Users

- UserId (uniqueidentifier, Primary Key): Unique identifier for each user.

- ApplicationId (uniqueidentifier): Identifier for the application to which the user belongs.

- UserName (nvarchar): User’s username.

- LoweredUserName (nvarchar): Lowercase version of the username for case-insensitive searches.

- MobileAlias (nvarchar): Optional mobile alias.

- IsAnonymous (bit): Indicates if the user is anonymous.

- LastActivityDate (datetime): The last time the user was active.

2. aspnet_Membership

- UserId (uniqueidentifier, Primary Key, Foreign Key): References

aspnet_Users.UserId. - ApplicationId (uniqueidentifier): Identifier for the application to which the membership belongs.

- Password (nvarchar): Encrypted user password.

- PasswordFormat (int): Format of the password (e.g., hashed, encrypted).

- PasswordSalt (nvarchar): Salt used for hashing the password.

- Email (nvarchar): User’s email address.

- PasswordQuestion (nvarchar): Security question for password recovery.

- PasswordAnswer (nvarchar): Answer to the security question.

- IsApproved (bit): Indicates if the user is approved.

- IsLockedOut (bit): Indicates if the user is locked out.

- CreateDate (datetime): The date the membership was created.

- LastLoginDate (datetime): The last time the user logged in.

- LastPasswordChangedDate (datetime): The last time the password was changed.

- LastLockoutDate (datetime): The last time the user was locked out.

- FailedPasswordAttemptCount (int): Count of failed password attempts.

- FailedPasswordAttemptWindowStart (datetime): Start of the period for counting failed password attempts.

- FailedPasswordAnswerAttemptCount (int): Count of failed attempts to answer the password question.

- FailedPasswordAnswerAttemptWindowStart (datetime): Start of the period for counting failed password answer attempts.

- Comment (nvarchar): Additional comments about the membership.

3. aspnet_UsersInRoles

- UserId (uniqueidentifier, Foreign Key): References

aspnet_Users.UserId. - RoleId (uniqueidentifier): Identifier for the role.

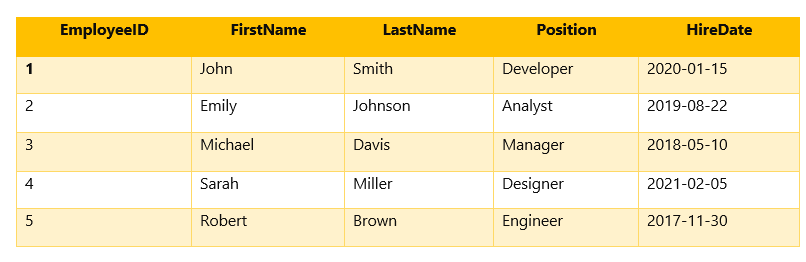

Example: add n records to aspnet Users tables

-- Inserting 4000 records into aspnet_Membership, aspnet_Users, and aspnet_UsersInRoles tables

-- Inserting records into aspnet_Membership table

DECLARE @counter INT = 1;

WHILE @counter <= 4000

BEGIN

INSERT INTO aspnet_Membership (UserId, ApplicationId, Password, PasswordFormat, PasswordSalt, Email, PasswordQuestion, PasswordAnswer, IsApproved, IsLockedOut, CreateDate, LastLoginDate, LastPasswordChangedDate, LastLockoutDate, FailedPasswordAttemptCount, FailedPasswordAttemptWindowStart, FailedPasswordAnswerAttemptCount, FailedPasswordAnswerAttemptWindowStart, Comment)

VALUES ('UserID_' + CAST(@counter AS VARCHAR), 'ApplicationID_' + CAST(@counter AS VARCHAR), 'Password_' + CAST(@counter AS VARCHAR), 1, 'Salt_' + CAST(@counter AS VARCHAR), 'email_' + CAST(@counter AS VARCHAR) + '@example.com', 'Question_' + CAST(@counter AS VARCHAR), 'Answer_' + CAST(@counter AS VARCHAR), 1, 0, GETDATE(), GETDATE(), GETDATE(), GETDATE(), 0, GETDATE(), 0, GETDATE(), 'Comment_' + CAST(@counter AS VARCHAR));

SET @counter = @counter + 1;

END;

-- Inserting records into aspnet_Users table

SET @counter = 1;

WHILE @counter <= 4000

BEGIN

INSERT INTO aspnet_Users (UserId, ApplicationId, UserName, LoweredUserName, MobileAlias, IsAnonymous, LastActivityDate)

VALUES ('UserID_' + CAST(@counter AS VARCHAR), 'ApplicationID_' + CAST(@counter AS VARCHAR), 'UserName_' + CAST(@counter AS VARCHAR), LOWER('UserName_' + CAST(@counter AS VARCHAR)), 'MobileAlias_' + CAST(@counter AS VARCHAR), 0, GETDATE());

SET @counter = @counter + 1;

END;If SQL script considering UserId and ApplicationId are of type uniqueidentifier use NEWID() for generate uniqueidentifier