Artificial Intelligence (AI) has transformed the way we interact with machines. AI can draft emails, help diagnose illnesses, and perform many other tasks. AI-based systems, particularly those built on Large Language Models (LLMs), rely on prompts to function, and the prompt heavily influences the outcome. In general, a prompt is the input you provide to an AI model so that it can generate an output.

Let’s look at the major types of prompts in AI, along with applicable real-world examples in areas such as business, healthcare, education, and software development.

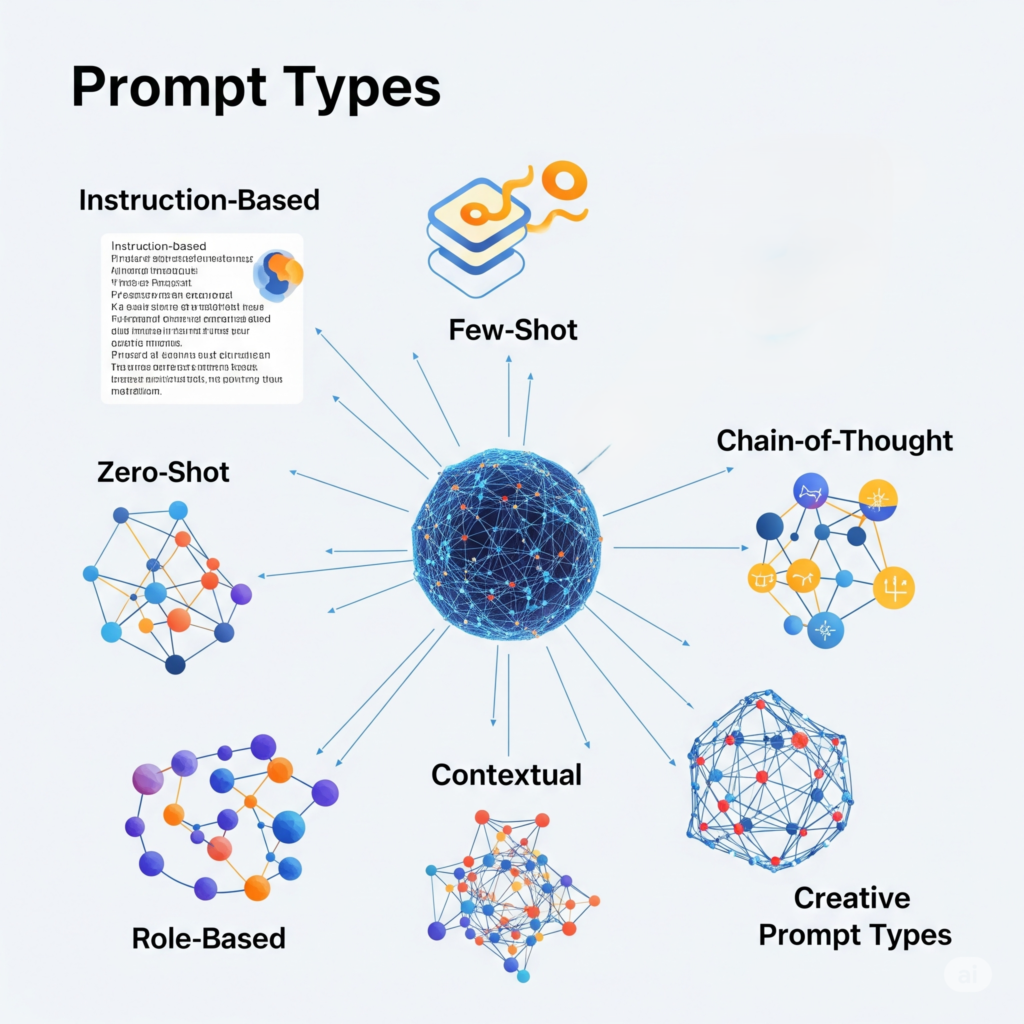

7 Types of Prompts in AI

Instruction-Based Prompts

Instruction-based prompts are direct commands or questions that tell an AI model exactly what task to perform. These prompts usually do not provide examples; instead, they give a clear, explicit instruction to guide the AI’s behaviour.

💼 Business Example:

Write a professional email to follow up with a client after a sales meeting.

Output: A well-structured email including greeting, meeting summary, and next steps.

🎓 Education Example:

Summarise the causes of World War I in under 100 words.

✅ Advantages

| Advantage | Description |
|---|---|
| Simplicity | Easy to write and understand, with no need for examples or formatting. |
| Efficiency | Saves time by going straight to the point, which is ideal for quick tasks like writing an email or listing steps. |
| Clarity | Gives the AI a clear direction, reducing confusion and minimising unpredictable outputs. |
| Good for Common Tasks | Works well with tasks the AI is already trained to perform, e.g., summarisation, translation, grammar correction. |
| Faster Output | Because the prompt is short and direct, the AI can respond quickly without needing extra context. |

❌ Disadvantages

| Disadvantage | Description |
|---|---|
| Lacks Flexibility | Without examples, the AI may interpret the task too literally or narrowly. |
| Not Ideal for Complex Tasks | For more nuanced or creative outputs, an instruction alone might not be enough. |
| Tone May Be Off | If you don’t specify a tone (e.g., formal, casual, friendly), the output might not match your intent. |
| Inconsistent Formatting | If the task involves formatting or structure, the lack of examples can lead to inconsistent results. |
| Assumes Prior Knowledge | The AI has to infer what you mean, which may lead to incorrect or incomplete answers if the prompt isn’t clear enough. |
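Several of the disadvantages above (tone, length, and formatting drifting from what you intended) come from leaving details unstated. As a minimal sketch, assuming you assemble prompts in Python before sending them to a model, a hypothetical helper `build_instruction_prompt` can append explicit constraints to the core instruction. It only builds text; it does not call any model or API.

```python
def build_instruction_prompt(task, tone=None, max_words=None, output_format=None):
    """Assemble a direct instruction, optionally adding explicit constraints."""
    # Normalise the task so it ends with exactly one full stop.
    parts = [task.strip().rstrip(".") + "."]
    if tone:
        parts.append(f"Use a {tone} tone.")
    if max_words:
        parts.append(f"Keep it under {max_words} words.")
    if output_format:
        parts.append(f"Format the answer as {output_format}.")
    return " ".join(parts)


prompt = build_instruction_prompt(
    "Write a professional email to follow up with a client after a sales meeting",
    tone="friendly but formal",
    max_words=150,
    output_format="a greeting, a short meeting summary, and a list of next steps",
)
print(prompt)
```

Each optional argument adds one explicit sentence, so a bare call such as `build_instruction_prompt("Summarise the causes of World War I")` still produces a plain instruction.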

Visual flowchart

Few-Shot Prompts

Few-shot prompts include a few examples that show the model the pattern or format to follow.

📊 Marketing Example:

Headline: Save Big on Laptops — Description: Limited-time deals on top brands.

Headline: Fresh Organic Fruits — Description: Order healthy, farm-fresh produce now.

Headline: [The AI fills this in with a relevant product ad in the same format]
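The same pattern can be assembled programmatically. This is a minimal sketch in Python; the helper `build_few_shot_prompt`, the leading instruction line, and the product name are hypothetical additions, and a plain hyphen separates headline and description. The final line is left open so the model continues the established pattern.

```python
EXAMPLES = [
    ("Save Big on Laptops", "Limited-time deals on top brands."),
    ("Fresh Organic Fruits", "Order healthy, farm-fresh produce now."),
]


def build_few_shot_prompt(examples, product):
    """Build a few-shot ad prompt: an instruction, worked examples, then an open slot."""
    lines = [f"Write a headline and description for a {product} ad, following the examples."]
    # Every worked example uses the identical layout so the pattern is unambiguous.
    for headline, description in examples:
        lines.append(f"Headline: {headline} - Description: {description}")
    # Leave the last line open so the model completes it in the same format.
    lines.append("Headline:")
    return "\n".join(lines)


print(build_few_shot_prompt(EXAMPLES, "wireless headphones"))
```

Adding or swapping entries in `EXAMPLES` changes what the model imitates, which is why example selection matters so much for this prompt type.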

✅ Advantages

| Advantage | Description |
|---|---|
| Better Contextual Understanding | Examples help the AI grasp the desired style, format, and type of output more accurately. |
| Flexibility | Enables the AI to adapt to nuanced or complex tasks by showing it different example patterns. |
| Less Need for Fine-Tuning | Reduces the need for costly model retraining by guiding the AI through examples in the prompt. |
| Improved Output Quality | Leads to more consistent and relevant responses aligned with the provided examples. |
| Quick to Implement | Adding examples to the prompt is faster than training or adjusting model parameters. |

❌ Disadvantages

| Disadvantage | Description |
|---|---|
| Prompt Length Limitations | Examples consume input space, limiting room for the actual query, especially in models with small maximum token limits. |
| Example Selection Sensitivity | Output quality depends heavily on how relevant and representative the provided examples are. |
| Not Always Perfect | The examples may not cover every variation needed, leading to imperfect or biased outputs. |
| Cost and Speed | Longer prompts increase computation cost and can slow response times. |
| Scalability Issues | Crafting examples for many different tasks can be time-consuming and difficult to maintain. |

Zero-Shot Prompts

Zero-shot prompts provide no examples: you simply describe the task.

🏥 Healthcare Example:

Explain the symptoms of Type 2 Diabetes in simple language.

📢 Customer Service Example:

Write a response to a customer complaint about a delayed shipment.

How Zero-Shot Prompts Work:

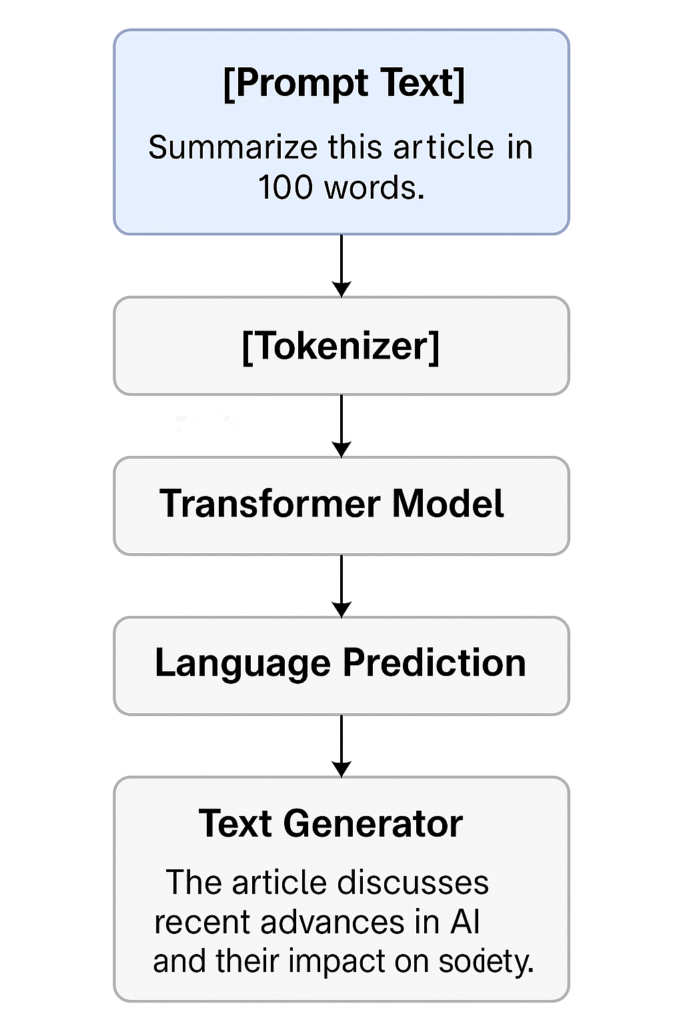

User Formulates the Instruction (The Prompt):

- The user writes a text prompt that directly asks the LLM to perform a task.
- This prompt includes:
  - A clear and concise instruction or question.
  - The input data to be processed.

Example Prompt: “Translate the following English sentence to French: ‘Hello, how are you?’”

LLM Receives the Prompt:

- The complete prompt (instruction + input) is passed to the large language model, which processes it as a single sequence of tokens.

Model Processes the Instruction and Input:

- Drawing on its training data (a vast amount of text covering a wide range of topics, languages, and tasks), the LLM analyses the instruction.

- It identifies keywords, understands the intent (e.g., “translate,” “summarise,” “classify,” “answer a question”), and recognises the input data.

- The model then leverages the patterns, relationships, and knowledge it has learned during its pre-training to fulfil the instruction for the given input. It’s essentially performing a “best guess” based on its existing understanding of language and the world.

- For instance, in the translation example, the model accesses its internal representations of English and French vocabulary, grammar, and translation patterns learned during pre-training.

Model Generates the Output:

- The LLM produces a sequence of tokens as its response, answering the instruction or completing the task specified in the prompt.

- The output is a direct result of the model applying its generalised knowledge to the specific instruction, without having seen any task-specific examples in the current interaction.

Example Output (for the translation prompt): “Bonjour, comment allez-vous ?”
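The first step of this process, combining an instruction and its input into one prompt with no examples, can be sketched in a few lines of Python. The helper `build_zero_shot_prompt` is hypothetical and only produces the text; sending it to a model and tokenising it are outside this sketch.

```python
def build_zero_shot_prompt(instruction, input_text=None):
    """Return a zero-shot prompt: one instruction, optional input data, no examples."""
    instruction = instruction.strip()
    if input_text is None:
        # Some tasks, like the healthcare example, need no separate input.
        return instruction
    # Append the input after the instruction so the model receives both
    # together as a single piece of text.
    return f"{instruction}: '{input_text}'"


print(build_zero_shot_prompt(
    "Translate the following English sentence to French", "Hello, how are you?"
))
print(build_zero_shot_prompt("Explain the symptoms of Type 2 Diabetes in simple language."))
```

Note that nothing in the output shows the model what a correct answer looks like; it must rely entirely on what it learned in pre-training.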

✅ Advantages

| Advantage | Description |
|---|---|
| No Training Data Required | No task-specific training data or examples are needed, which saves considerable time and resources. |
| Versatility and Flexibility | One model can tackle many different tasks from instructions alone, making zero-shot prompts adaptable to new problems and a versatile option for many natural language processing (NLP) applications. |
| Rapid Prototyping | You can quickly test different approaches and iterations without the overhead of data collection and model training, accelerating the development cycle. |
| Reduced Development Costs | Lowers the financial cost of developing and deploying AI solutions, making advanced NLP capabilities more accessible. |
| Handles Rare Categories/Tasks | The model can infer an answer from its general understanding rather than relying on specific examples. |

❌ Disadvantages

| Disadvantage | Description |
|---|---|
| Lower Accuracy/Reliability | Often falls short of the accuracy achieved by models fine-tuned on task-specific data. The model may struggle with nuanced interpretations or highly specific domain knowledge that was not sufficiently captured during pre-training. |
| Sensitivity to Prompt Phrasing | Small changes in phrasing, keywords, or the inclusion of specific instructions can significantly alter the output. |
| Lack of Specificity/Context | Without task-specific examples, the model may lack the context or domain knowledge needed to give highly accurate or detailed responses. |
| Potential for Hallucinations | Because the model has no task-specific examples to ground it, it may make educated guesses that turn out to be wrong. |
| Scalability Challenges | Each new task might require unique prompt engineering, and maintaining a library of effective prompts can become complex as the number of tasks grows. Fine-tuning might still be necessary for complex, production-grade applications that demand high accuracy and reliability. |
| Bias Amplification | If the pre-training data contains biases, these can surface or be amplified in zero-shot outputs, leading to unfair or discriminatory results, because the model has no task-specific training to counteract them. |

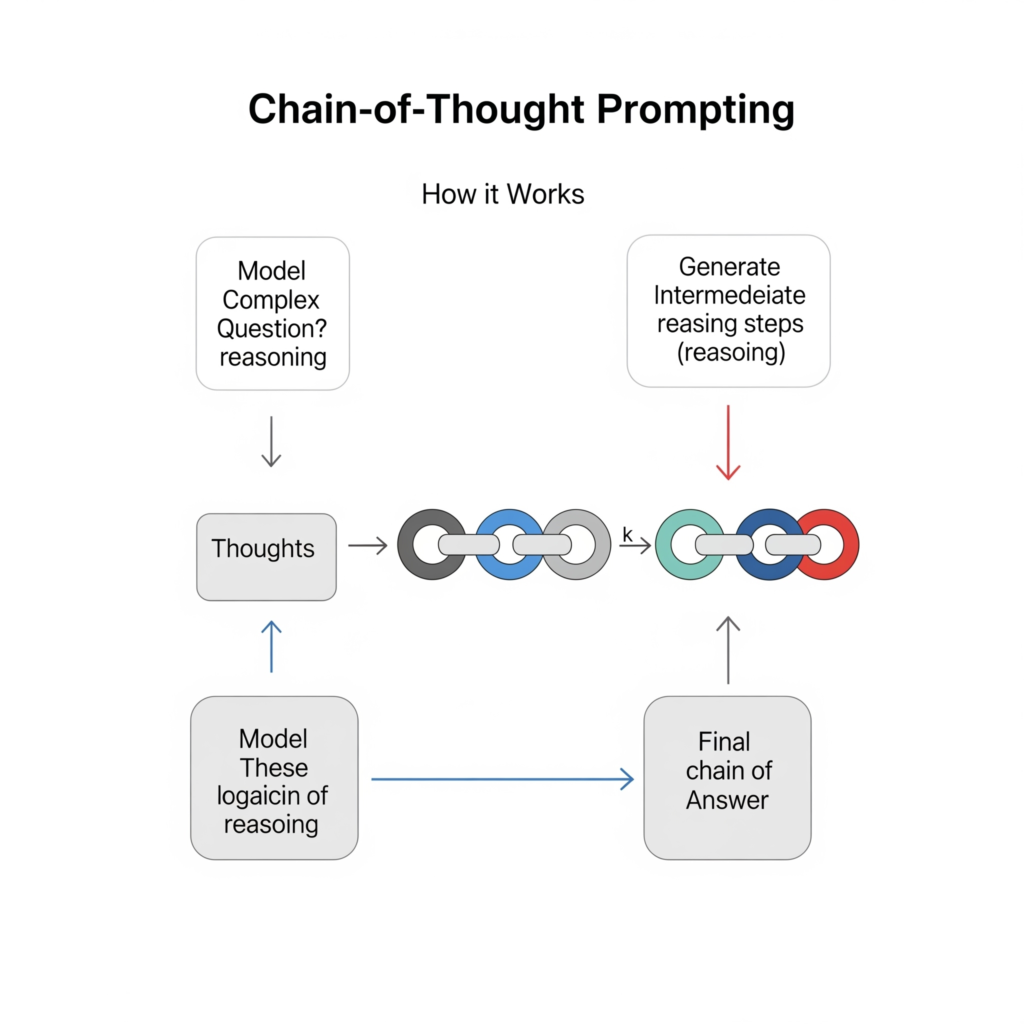

Chain-of-Thought Prompts

Chain-of-thought prompts guide the AI to work through a problem step by step, explaining its reasoning before giving a final answer.

⚖️ Legal Assistant Example:

Is it legal to record phone calls in California? Explain your answer in steps.
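In code, a chain-of-thought prompt is often just a question with a reasoning instruction appended, plus a way to pull the conclusion out of the longer response. This is a minimal Python sketch; the wording of `COT_SUFFIX`, the `Answer:` marker, and both helper functions are hypothetical conventions, and the sample response is placeholder text, not real model output or legal advice.

```python
# Hypothetical instruction appended to every question; the "Answer:" marker
# is an assumed convention that makes the conclusion easy to find.
COT_SUFFIX = (
    "Explain your answer in numbered steps, then give your conclusion "
    "on a final line that starts with 'Answer:'."
)


def build_cot_prompt(question):
    """Append a step-by-step reasoning instruction to a question."""
    return f"{question.strip()} {COT_SUFFIX}"


def extract_final_answer(response):
    """Return the text after the last 'Answer:' line, or None if there is none."""
    for line in reversed(response.splitlines()):
        stripped = line.strip()
        if stripped.startswith("Answer:"):
            return stripped[len("Answer:"):].strip()
    return None


print(build_cot_prompt("Is it legal to record phone calls in California?"))

# A placeholder response (not real model output) to show the parsing step.
sample = "1. First reasoning step.\n2. Second reasoning step.\nAnswer: Final conclusion."
print(extract_final_answer(sample))
```

Keeping the steps and the conclusion separate lets you show the reasoning to a reviewer while passing only the final answer downstream; if the marker is missing, the function returns `None` rather than guessing.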

Here are the advantages and disadvantages of Chain-of-Thought (CoT) prompts, described simply:

✅ Advantages

| Advantage | Description |
|---|---|
| Improved Accuracy | By breaking a problem down into smaller steps, the model can work through the solution more carefully, often producing more correct answers on complex tasks like math or reasoning. |
| Better Reasoning | CoT has the model show its “thought process”. This makes it more likely to follow logical steps, similar to how a human solves a problem, and reduces errors from jumping straight to an answer. |
| Transparency and Explainability | You can see the intermediate steps the model took to reach its conclusion. |
| Handles Complex Tasks | CoT is very effective for tasks that require multiple steps of reasoning, like multi-step math problems, complex coding, or intricate logical puzzles, where a direct answer might be too difficult for the model to generate accurately. |
| Reduced Hallucinations | Asking the model to explain its reasoning can make it less likely to make up facts or produce nonsensical information, since each step of its “thought” process has to follow from the previous one. |
| Less Data for Fine-tuning | In some cases, CoT can make models perform better on new tasks even with less task-specific training data, because the prompt guides the model to use its existing knowledge more effectively for reasoning. |

❌ Disadvantages

| Disadvantage | Description |
|---|---|
| Increased Prompt Length | CoT prompts are often much longer than standard prompts because they include instructions for thinking step by step. This uses up more of the model’s input space (token limit), which can be a problem for very long or complex tasks. |
| Higher Computational Cost | Step-by-step answers contain many more output tokens than a direct answer, which increases cost and response time. |
| Still Susceptible to Errors | CoT does not guarantee a correct answer. If the model makes a mistake in an early step, later steps will likely be wrong too, leading to an incorrect final answer. The reasoning steps themselves can also be flawed. |
| Requires Careful Prompt Engineering | You need to guide the model on how to think step by step, which might require experimentation and refinement. |
| Not Always Necessary | For simple tasks, CoT adds complexity and cost without providing significant benefits. |
| Potential for “Garbage In, Garbage Out” | The quality of the input heavily influences the output. |

Role-Based Prompts

Role-based prompts give the AI a specific identity or role, such as a chef or a doctor, to shape the tone and content of its response.

🧑‍🍳 Food Blogger Example:

You are a chef. Recommend a healthy breakfast recipe for diabetics.

Process of Role-Based Prompts

Define the Role:

- The first and most important step is to clearly explain the role you want the LLM to adopt. This involves specifying:
  - Who it is: (e.g., “You are a marketing expert,” “You are a lawyer,” “You are a doctor.”)
  - Their expertise/knowledge: (e.g., “You have deep knowledge of ancient Roman history,” “You are an expert Python programmer.”)
  - Their style/tone: (e.g., “Respond in a formal tone,” “Use informal language,” “Be concise and direct.”)
  - Their goals/constraints (optional but helpful): (e.g., “Your goal is to simplify complex topics,” “You must always provide three actionable tips.”)
- Example Role Definition: “You are a professional travel blogger with a passion for sustainable tourism. Your writing style is engaging, informative, and slightly adventurous. Your goal is to inspire readers to explore eco-friendly travel options.”

State the Role in the Prompt:

- You preface your main request or question to the LLM with this role definition. It’s usually the very first part of your prompt. This sets the stage for the entire interaction.

- Example Prompt Opening: “You are a professional travel blogger with a passion for sustainable tourism. Your writing style is engaging, informative, and slightly adventurous. Your goal is to inspire readers to explore eco-friendly travel options. Now, write an article about the benefits of choosing local accommodations over large hotel chains.”

Provide the Main Request/Query:

- After establishing the role, you then provide the actual task, question, or topic you want the LLM to address. This part is your core instruction.

- Example Main Request: “Write an article about the benefits of choosing local accommodations over large hotel chains.”

LLM Processes with Role in Mind:

- When the LLM receives this combined prompt, the role definition conditions everything it generates next: the model draws on the knowledge, vocabulary, stylistic patterns, and reasoning associated with that role in its pre-training data.

- It effectively filters response generation through the role.

LLM Generates Role-Consistent Output:

- The LLM generates a response that not only addresses your main request but also adheres to the specified role, including:
  - Content: Focusing on information relevant to the role’s expertise.
  - Tone: Matching the emotional and formal quality of the role.
  - Style: Using vocabulary, sentence structure, and descriptive language appropriate for the persona.
  - Perspective: Presenting the information from the viewpoint of the assigned role.

- Example Output (from the travel blogger example): The LLM would produce an article that is engaging, highlights environmental and community benefits, perhaps uses evocative travel language, and encourages readers to consider eco-friendly choices, all reflecting the specified travel blogger role.
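The steps above (define the role, state it first, then give the request) map naturally onto code. Below is a minimal Python sketch under the assumption that your model accepts either a plain string or a list of `{"role": ..., "content": ...}` messages, a layout many chat APIs use; the helper names are hypothetical, and no API is called here.

```python
ROLE = (
    "You are a professional travel blogger with a passion for sustainable tourism. "
    "Your writing style is engaging, informative, and slightly adventurous. "
    "Your goal is to inspire readers to explore eco-friendly travel options."
)
REQUEST = (
    "Write an article about the benefits of choosing local accommodations "
    "over large hotel chains."
)


def build_role_messages(role_definition, request):
    """Return a chat-style message list with the role stated before the request."""
    return [
        # The role definition comes first so it frames the whole interaction.
        {"role": "system", "content": role_definition.strip()},
        {"role": "user", "content": request.strip()},
    ]


def build_role_prompt(role_definition, request):
    """Plain-text variant: the role definition simply prefaces the request."""
    return f"{role_definition.strip()}\n\n{request.strip()}"


for message in build_role_messages(ROLE, REQUEST):
    print(message["role"], "->", message["content"][:40])
```

Keeping the role in its own message makes it easy to reuse the same persona across many requests while changing only the user content.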

Here are the advantages and disadvantages of Role-Based Prompts:

✅ Advantages

| Advantage | Description |
|---|---|
| Tailored Tone and Style | Helps the output match the desired communication context, from formal reports to casual social media posts. |
| Improved Relevance and Focus | Produces more focused, insightful answers aligned with the expertise and perspective of the assigned persona, reducing irrelevant information. |
| Enhanced Creativity and Specificity | The role shapes the register of the output: asking the AI to be a “legal advisor”, for example, tends to produce legally precise (though not legally binding) language. |
| Better Control Over Output | You can dictate not only what is said but also how it’s said, making the LLM’s responses more predictable and aligned with user expectations. |
| Simulation of Interactions | You can have the LLM act as a customer service agent, a sales representative, a historical figure, or a fictional character, making it useful for training, testing, or creative writing. |
| Simplifies Complex Instructions | A single role statement can stand in for many separate style and content instructions, making the prompt shorter and easier to write. |

❌ Disadvantages

| Disadvantage | Description |
|---|---|
| Potential for Misinterpretation of Role | The AI may produce responses that don’t quite fit the persona you intended, especially if the role is not commonly represented in its training data. |
| Can Reinforce Stereotypes/Bias | For example, asking it to be a “CEO” might lead to responses that lean towards traditionally male characteristics if that’s what’s prevalent in its training data. |
| May Limit Scope Unintentionally | The role may cause the AI to leave out information or perspectives that a more general query would have included, even when that information would benefit the user. |
| “Over-Acting” or Exaggeration | The AI may overplay the persona, making the output sound unnatural or forced. |
| Increased Prompt Length/Complexity | Defining a role adds to the length of the prompt, contributing to token usage and potentially higher computational costs for complex scenarios. |
| Doesn’t Guarantee Factual Accuracy | The AI can still provide incorrect information, even when adopting an authoritative persona. Users must still verify critical information. |

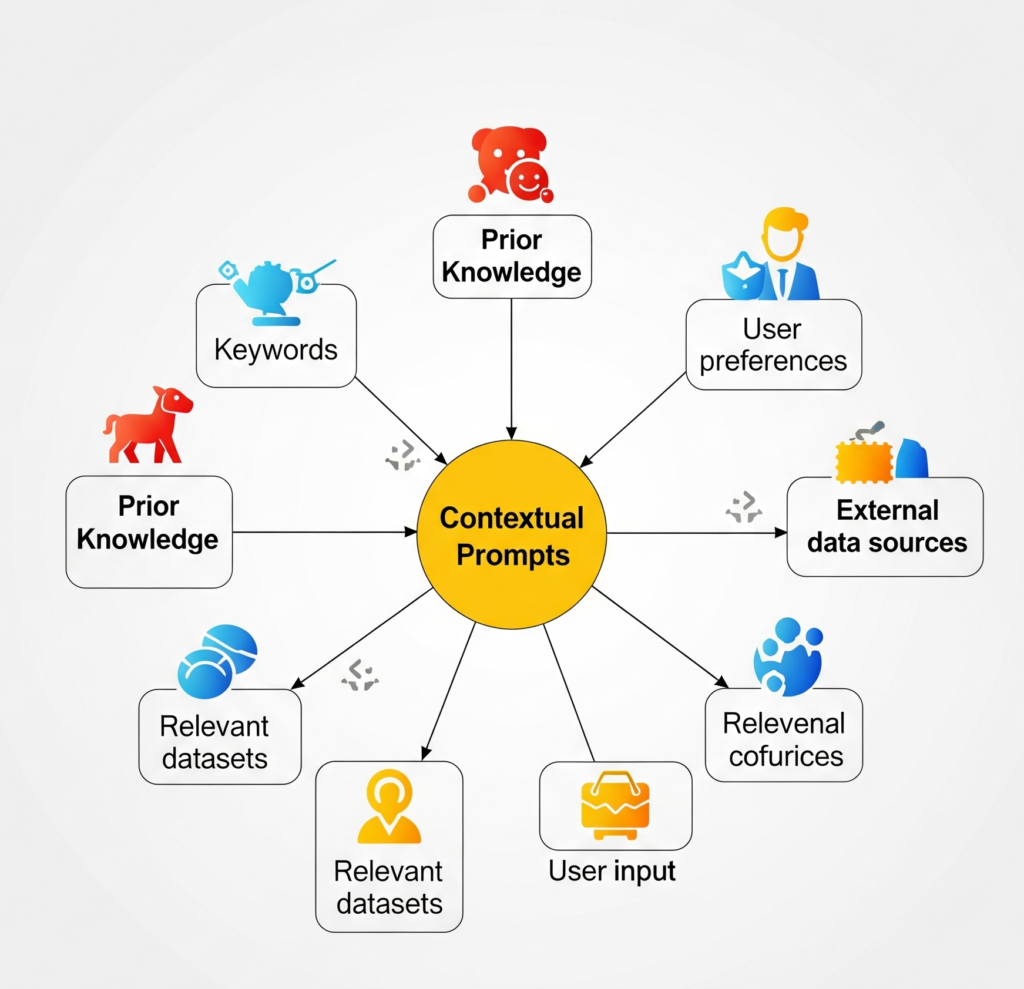

Contextual Prompts

Contextual prompts include relevant background information so the AI can give a more relevant and accurate response.

📈 Business Meeting Assistant Example:

Earlier, we discussed Q2 revenue trends. Now summarise Q3 projections based on past growth.
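A contextual prompt places background information ahead of the actual request. This minimal Python sketch does that and also illustrates the context-window trade-off by dropping the oldest context first when the prompt grows too long. Two assumptions to flag: the helper names are hypothetical, and `max_chars` is a crude character-count stand-in for a real model’s context window, which is measured in tokens by the model’s own tokenizer.

```python
def render_prompt(context_items, request):
    """Lay out background items above the task."""
    if not context_items:
        return f"Task: {request}"
    background = "\n".join(f"- {item}" for item in context_items)
    return f"Background:\n{background}\n\nTask: {request}"


def build_contextual_prompt(context_items, request, max_chars=2000):
    """Prepend context, dropping the oldest items until the prompt fits max_chars.

    max_chars is a rough proxy for a model's context window (assumption).
    """
    kept = list(context_items)
    while kept and len(render_prompt(kept, request)) > max_chars:
        kept.pop(0)  # discard the oldest context first
    return render_prompt(kept, request)


history = [
    "Q1 revenue trends were reviewed.",
    "Q2 revenue trends were discussed.",
]
request = "Summarise Q3 projections based on past growth."

print(build_contextual_prompt(history, request))
print(build_contextual_prompt(history, request, max_chars=120))
```

Dropping the oldest items is only one policy; summarising earlier context or keeping only the most relevant items are common alternatives when important background would otherwise be lost.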

Here are the advantages and disadvantages of Contextual Prompts:

✅ Advantages

| Advantage | Simple Description |
|---|---|
| Highly Relevant Answers | Answers are tailored to the supplied background, avoiding generic or off-topic responses. |
| Reduced Ambiguity | By providing background information, the prompt clarifies what the user is asking, helping the model understand the exact intent. |
| Improved Accuracy | The model can draw on facts supplied in the context, making correct, situation-specific details more likely. |
| Handles Complex Scenarios | It allows the model to process and respond to questions that require understanding of a specific situation, document, or conversation history. |
| Better for Follow-Up Questions | The model can maintain continuity and give coherent answers to a series of related questions within the same context. |

❌ Disadvantages

| Disadvantage | Simple Description |
|---|---|
| Increased Prompt Length | Including a lot of context makes the prompt much longer, using up more tokens, which can be costly and slow. |
| Context Window Limitations | If the context exceeds the model’s context window, parts of it are cut off or overlooked, leading to incomplete or incorrect answers. |
| Quality Depends on Context | If the provided context is inaccurate, incomplete, or poorly written, the output can be incorrect. |
| Requires Careful Preparation | Context must be chosen carefully, because irrelevant information can confuse the model. |
| No External Knowledge | When the model is told to answer only from the given text, it cannot answer questions whose answers lie outside that text. |

Creative Prompts

Creative prompts are open-ended and encourage imagination.

🎨 Storytelling App Example:

Write a short story about a cat who becomes the mayor of a small village.

Here are the advantages and disadvantages of Creative Prompts with short, simple descriptions:

✅ Advantages

| Advantage | Simple Description |
|---|---|
| Generates Novel Ideas | Helps the AI come up with unique, imaginative, and out-of-the-box concepts that might not otherwise appear. |
| Breaks Writer’s Block | Provides a starting point or inspiration when a user is stuck or doesn’t know where to begin. |
| Explores New Perspectives | Encourages the AI to view a topic from unusual angles or develop unexpected connections. |
| Stimulates Imagination | Leads to more vivid descriptions, inventive plots, or original artistic expressions from the AI. |
| Fun and Engaging | Makes the interaction with the AI more enjoyable and less like a straightforward question-and-answer session. |

❌ Disadvantages

| Disadvantage | Simple Description |
|---|---|
| Unpredictable Output | The results can sometimes be too abstract, nonsensical, or completely different from what was vaguely intended. |
| Requires More Refinement | Often needs further prompts or editing to shape the creative output into something truly useful or coherent. |
| Can Lack Practicality | The generated ideas might be highly imaginative but not feasible or relevant for real-world applications or specific goals. |
| Risk of “Hallucination” | Creative prompts can sometimes push the AI to generate entirely fabricated or illogical information in its pursuit of originality. |
| Depends on AI’s Capabilities | The quality of creative output highly depends on the specific AI model’s training and its ability to understand and interpret abstract requests. |