3 Steps to SEO on Google Blogger: A Comprehensive Guide

SEO on Google Blogger

Search engine optimization (SEO) is the process of improving the quality and quantity of website traffic by increasing the visibility of a website or web page to users of a search engine such as Google, Bing, or Yahoo.

SEO targets unpaid traffic (known as “natural” or “organic” results) and excludes direct traffic and paid placement.

SEO may target different kinds of searches, including image search, video search, academic search, news search, and industry-specific vertical search engines.

Now let’s look at how to apply SEO on Google Blogger.

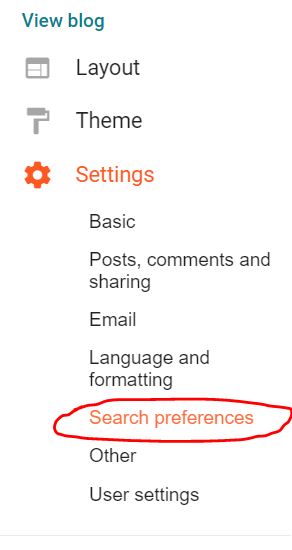

Step 1: Enable search description

- Title tags: The title tag of a webpage is a crucial factor. It often summarizes the content and helps Google understand the topic.

- Headings: Headings (H1, H2, etc.) within the webpage structure also play a role. Google might use these headings to identify key points and topics.

- Content: The content itself is analyzed for keywords, relevance to the search query, and overall theme.

Select “Yes” and save the changes.

You can now add a search description (a meta description tag) to each post.

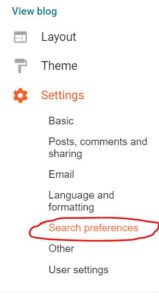
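Once search descriptions are enabled, each post's description ends up in a meta description tag. The following is a minimal Python sketch of how such a tag could be assembled from free text. The function name `search_description_tag` is hypothetical, and the 150-character limit is an assumption about Blogger's search description field, so adjust it if your dashboard shows a different limit.

```python
from html import escape

# Assumed maximum length of a Blogger search description (not confirmed by the article).
MAX_LEN = 150


def search_description_tag(text: str, max_len: int = MAX_LEN) -> str:
    """Build a meta description tag from free text (illustrative helper)."""
    # Collapse runs of whitespace and newlines into single spaces.
    collapsed = " ".join(text.split())
    if len(collapsed) > max_len:
        # Cut at the last space that fits, leaving room for "...".
        collapsed = collapsed[: max_len - 3].rsplit(" ", 1)[0] + "..."
    # Escape quotes and angle brackets so the attribute stays valid HTML.
    return f'<meta name="description" content="{escape(collapsed, quote=True)}">'


tag = search_description_tag('Learn   "SEO" on\nGoogle Blogger in three steps')
print(tag)
```

Writing the description by hand for each post is still the better option; a helper like this only keeps the length and escaping in check.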

Step 2: Enable custom robots.txt content

A robots.txt file is a plain-text file in your website’s root directory that tells web robots (such as search engine crawlers) which parts of your site they may crawl. On most self-hosted sites, no special setting is needed to use a custom robots.txt file: you create the file and upload it to the root directory. Blogger does not give you file access, so there you paste the same content into a settings field instead, as described under “How to do it” below.

Here’s a breakdown of the process:

- Create the robots.txt file: You can use a simple text editor like Notepad or TextEdit to create a file named “robots.txt”.

- Add your desired directives: Inside the file, add directives for search engine crawlers, such as User-agent, Disallow, Allow, and Sitemap.

- Upload the file: Use your FTP client or your web hosting platform’s file manager to upload the “robots.txt” file to the root directory of your website. This is typically the same directory where your main website files (like index.html) reside.
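The steps above can be sketched in Python as a small helper that assembles the text of a robots.txt file. This is only an illustration: the function name `build_robots_txt`, the `/search` rule, and the `www.example.com` sitemap URL are assumptions, not values taken from this guide.

```python
def build_robots_txt(groups, sitemaps=()):
    """Assemble robots.txt text (illustrative helper).

    groups: list of (user_agent, [(directive, path), ...]) tuples.
    sitemaps: iterable of absolute sitemap URLs.
    """
    lines = []
    for user_agent, rules in groups:
        lines.append(f"User-agent: {user_agent}")
        for directive, path in rules:
            lines.append(f"{directive}: {path}")
        lines.append("")  # a blank line separates groups
    for url in sitemaps:
        lines.append(f"Sitemap: {url}")
    # Normalise to exactly one trailing newline.
    return "\n".join(lines).rstrip("\n") + "\n"


# Hypothetical rules: block internal search pages, allow everything else.
robots_txt = build_robots_txt(
    groups=[("*", [("Disallow", "/search"), ("Allow", "/")])],
    sitemaps=["https://www.example.com/sitemap.xml"],
)
print(robots_txt)
```

Saving the resulting string as a file named robots.txt gives you the file to upload; on Blogger you would paste the text into the custom robots.txt field instead.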

How to do it

Go to https://ctrlq.org/blogger/

Enter your Blogger URL and generate the sitemap.

Copy the result, paste it into the following text area, and save the changes.

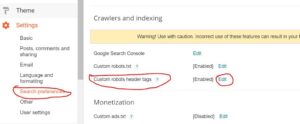

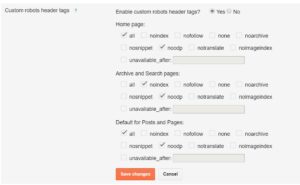
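Before pasting robots.txt content into Blogger, it can help to check that it blocks and allows what you expect. The sketch below uses Python's standard `urllib.robotparser` module. The rules and the `example.blogspot.com` URLs are assumptions chosen for illustration, so replace them with the text you actually generated. Note that this parser applies rules in file order, which can differ from Google's longest-match behavior, so treat the result as a rough check.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for a blog at example.blogspot.com.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Allow: /

Sitemap: https://example.blogspot.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

post_url = "https://example.blogspot.com/2024/01/my-post.html"
label_url = "https://example.blogspot.com/search/label/seo"

# Ordinary posts should stay crawlable; search and label pages should not.
print("post crawlable:", parser.can_fetch("*", post_url))
print("label page crawlable:", parser.can_fetch("*", label_url))
print("sitemaps:", parser.site_maps())
```

`site_maps()` requires Python 3.8 or later.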

Step 3: Enable Custom robots header tags

1. Accessing Robots.txt:

The most common way to implement custom robots directives is through a robots.txt file. This file resides in the root directory of your website. You can access it using an FTP client or your website’s file manager.

2. Adding Directives:

Within the robots.txt file, you can specify instructions for search engine crawlers using directives. Here’s an example:

# Block all crawling except for the homepage and contact page

User-agent: *

Disallow: /

# Allow crawling of the homepage and contact page

Allow: /$

Allow: /contact-us.html

# Ask crawlers to wait 7 seconds between requests (Googlebot ignores this directive)

Crawl-delay: 7

# Tell search engines not to crawl a specific directory

Disallow: /images/

In this example:

- User-agent: * – This line applies the following directives to all search engine crawlers.

- Disallow: / – This blocks crawling of every directory and file on the website.

- Allow: /$ – This makes an exception for the homepage. The $ anchors the end of the URL; a plain Allow: / would match every URL and cancel the Disallow: / rule.

- Allow: /contact-us.html – This allows crawling of the contact page (/contact-us.html).

- Crawl-delay: 7 – This asks crawlers to wait 7 seconds between requests. It is a non-standard directive: some crawlers honor it, but Googlebot ignores it.

- Disallow: /images/ – This blocks crawling of the “images” directory. It is redundant here because Disallow: / already covers it, and it controls crawling, not indexing.
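When Allow and Disallow rules overlap, Google's documentation and RFC 9309 resolve the conflict by taking the longest matching rule, with Allow winning a tie. The following Python sketch illustrates that precedence rule only and is not a full robots.txt parser. The function names are hypothetical, and the rule list uses `Allow: /$`, where `$` anchors the end of the path so that only the homepage matches.

```python
import re


def pattern_to_regex(pattern: str) -> "re.Pattern[str]":
    """Translate a robots.txt path pattern into a regex.

    '*' matches any run of characters; a trailing '$' anchors the end.
    """
    anchored = pattern.endswith("$")
    body = pattern[:-1] if anchored else pattern
    regex = ".*".join(re.escape(part) for part in body.split("*"))
    return re.compile(regex + ("$" if anchored else ""))


def is_allowed(rules, path: str) -> bool:
    """Apply longest-match precedence to (directive, pattern) rules."""
    best_len = -1
    allowed = True  # a path that matches no rule may be crawled
    for directive, pattern in rules:
        if not pattern:
            continue  # an empty pattern matches nothing
        if pattern_to_regex(pattern).match(path):
            is_allow = directive.lower() == "allow"
            # Longer pattern wins; on equal length, Allow wins.
            if len(pattern) > best_len or (len(pattern) == best_len and is_allow):
                best_len = len(pattern)
                allowed = is_allow
    return allowed


RULES = [
    ("Disallow", "/"),
    ("Allow", "/$"),
    ("Allow", "/contact-us.html"),
]

for path in ["/", "/contact-us.html", "/images/logo.png"]:
    print(path, "->", "allowed" if is_allowed(RULES, path) else "blocked")
```

Replacing `Allow: /$` with a plain `Allow: /` in `RULES` makes every path allowed, because the two one-character rules tie and Allow wins.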

3. Advanced Directives:

Other directives give finer control. Note that only Sitemap belongs in robots.txt. The noindex and nofollow values are robots header tags, set per page through a robots meta tag or an X-Robots-Tag HTTP header, which is what Blogger’s custom robots header tags setting controls:

- noindex – Prevents a specific page from being indexed (e.g., on /login.php).

- nofollow – Instructs search engines not to follow links on a page (e.g., on /affiliate-links.html).

- Sitemap: https://www.yourwebsite.com/sitemap.xml – Specifies the location of your website’s sitemap for better crawling.
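To make the page-level values concrete, here is a small Python sketch that renders a robots meta tag for different kinds of pages. The three section names loosely mirror the groups in Blogger's custom robots header tags setting, but the values assigned to each are only an assumed example, not a recommendation from this guide, and the helper name `robots_meta_tag` is hypothetical.

```python
from html import escape

# A subset of robots meta tag values that Google documents.
VALID_DIRECTIVES = {
    "all", "noindex", "nofollow", "none",
    "nosnippet", "noimageindex", "notranslate",
}

# Assumed example choices per section; adjust to your own needs.
SECTION_DIRECTIVES = {
    "home": ["all"],
    "archive_and_search": ["noindex"],
    "posts_and_pages": ["all"],
}


def robots_meta_tag(directives) -> str:
    """Render a robots meta tag, rejecting values outside the known subset."""
    unknown = set(directives) - VALID_DIRECTIVES
    if unknown:
        raise ValueError(f"unknown robots directives: {sorted(unknown)}")
    content = ", ".join(directives)
    return f'<meta name="robots" content="{escape(content, quote=True)}">'


for section, directives in SECTION_DIRECTIVES.items():
    print(section, "->", robots_meta_tag(directives))
```

On Blogger you do not write these tags by hand; the settings page emits the equivalent directives once you tick the options.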

4. Important Considerations:

- Robots.txt is a request, not an enforcement mechanism. Reputable search engines honor it, but other crawlers can choose to ignore it.

- Use custom robots tags strategically to avoid accidentally blocking important content.

- Test any changes made to robots.txt using tools like Google Search Console to ensure they don’t negatively impact your website’s search engine visibility.

How to do it? Select the options as follows and save the changes.

Conclusion

SEO on Google Blogger is a gradual process that requires careful planning, diligent execution, and ongoing refinement. By following these three steps (enabling search descriptions, adding custom robots.txt content, and setting custom robots header tags), you can help search engines crawl and index your blog and lay the groundwork for lasting success in the competitive world of online publishing.